Product

Prompt Studio

Save prompts. Refine them over time. Private by default.

Capture a voice from writing samples and refine prompts with AI. Prompt Studio keeps every prompt, test, and model integration on your machine.

Mix & Match Your Voice

Combine profiles to craft your perfect prompt

In the product

Keep your prompts and tests in one clean workspace.

The snapshots below are representative. Prompt Studio ships with a focused editor, evaluation runs, and reports that never leave your machine.

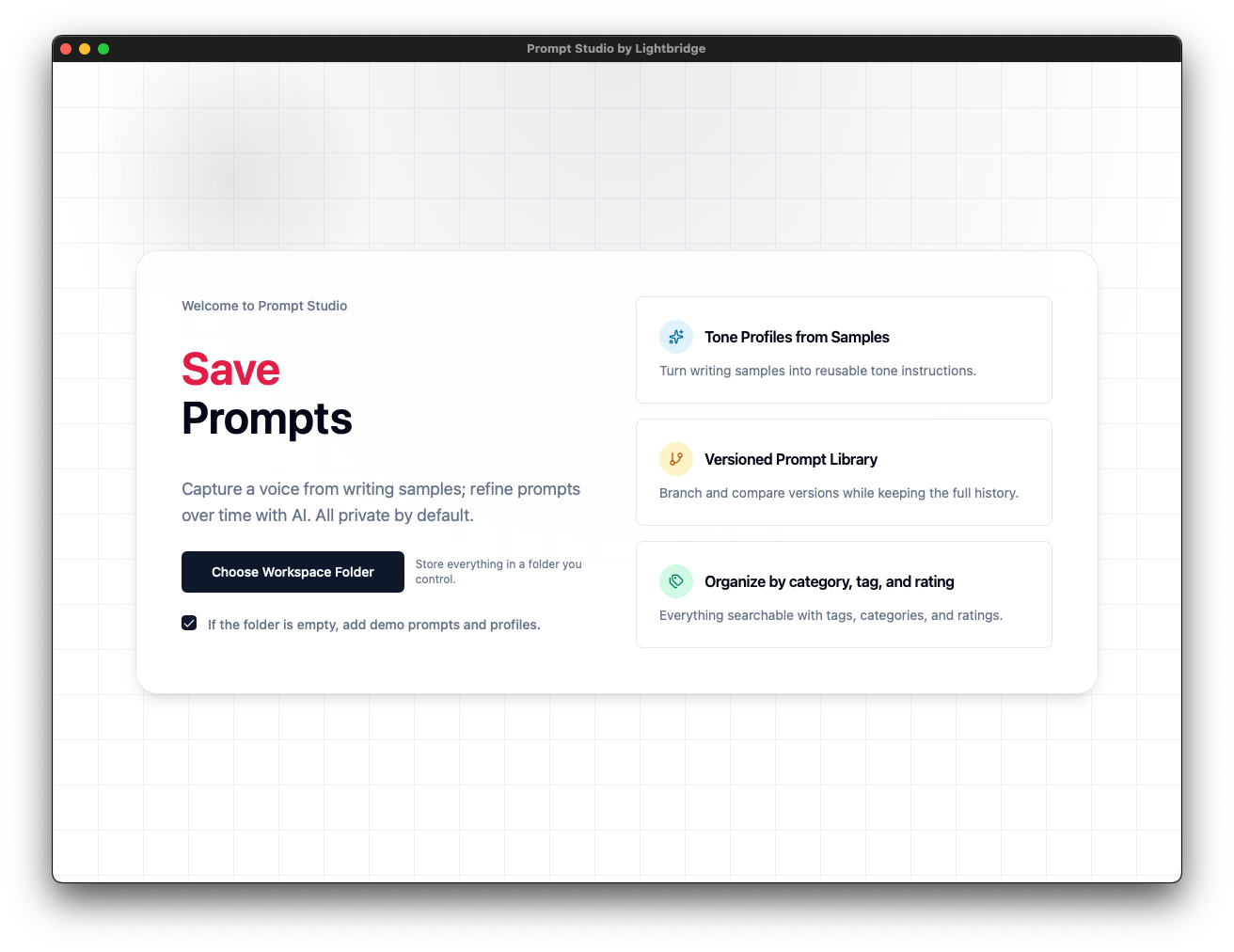

Welcome to Prompt Studio

Start with a clean workspace that highlights the core features.

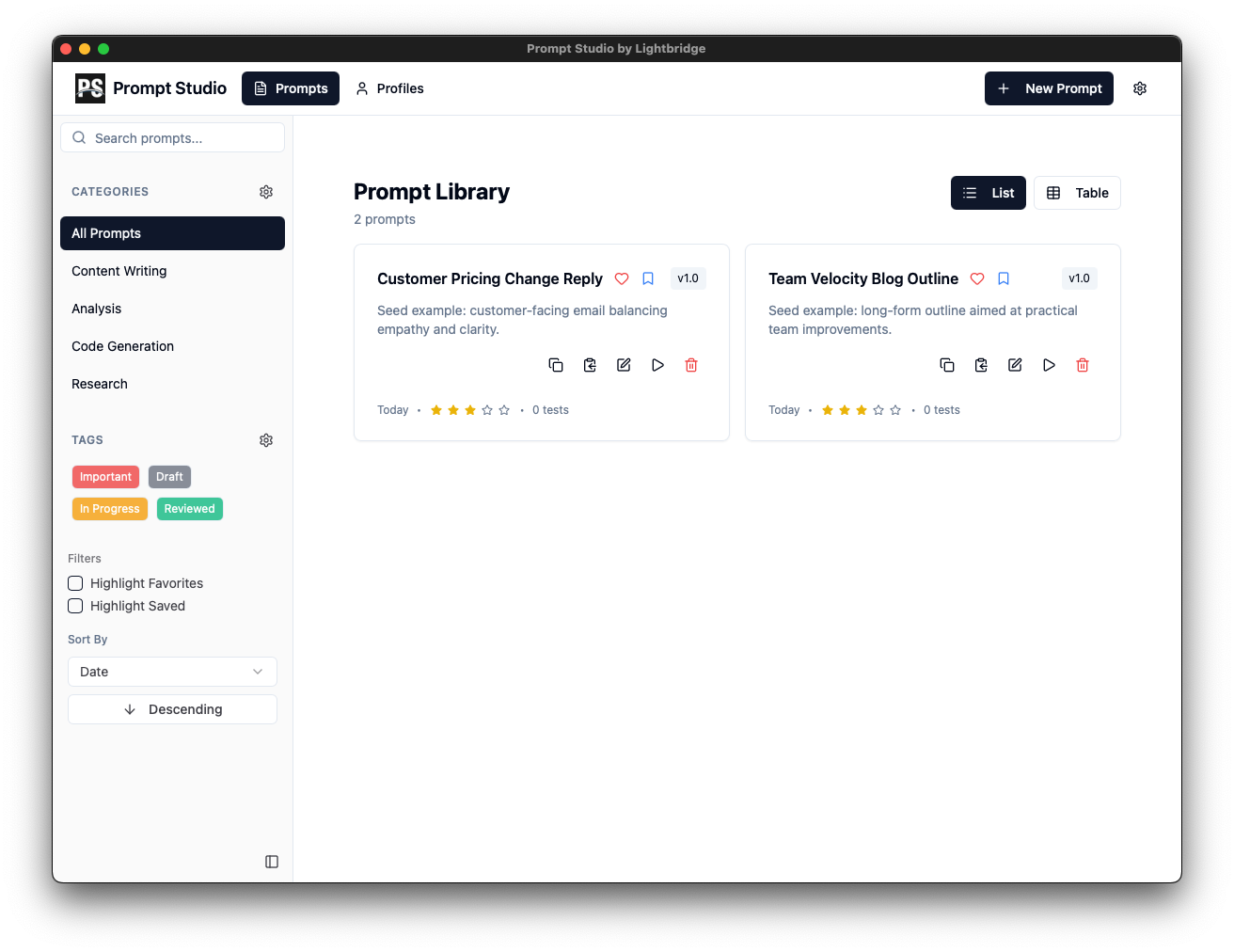

Prompt Library

Browse and organize all your prompts with version history.

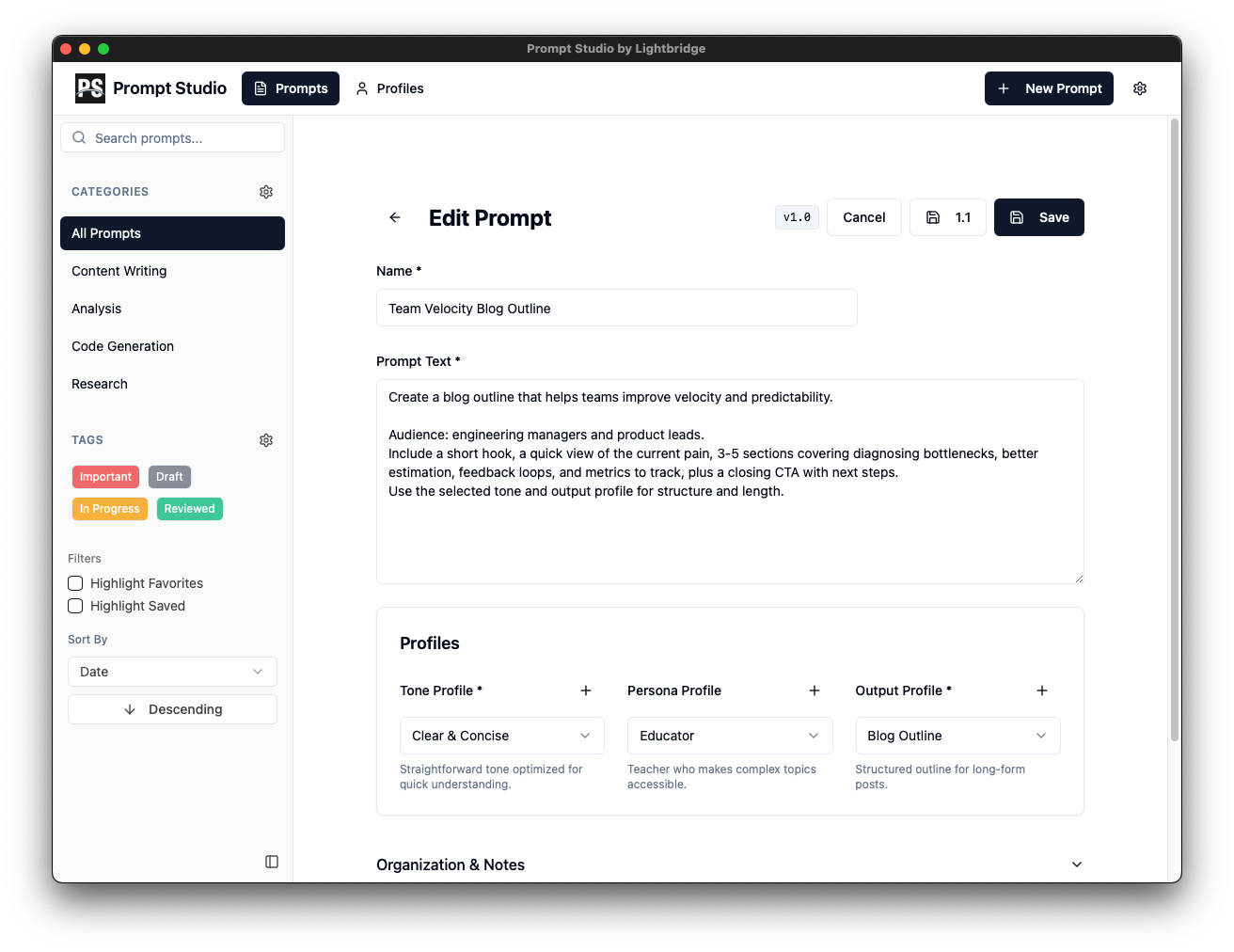

Edit and Test Prompts

Refine prompts in a dedicated editor with real-time testing.

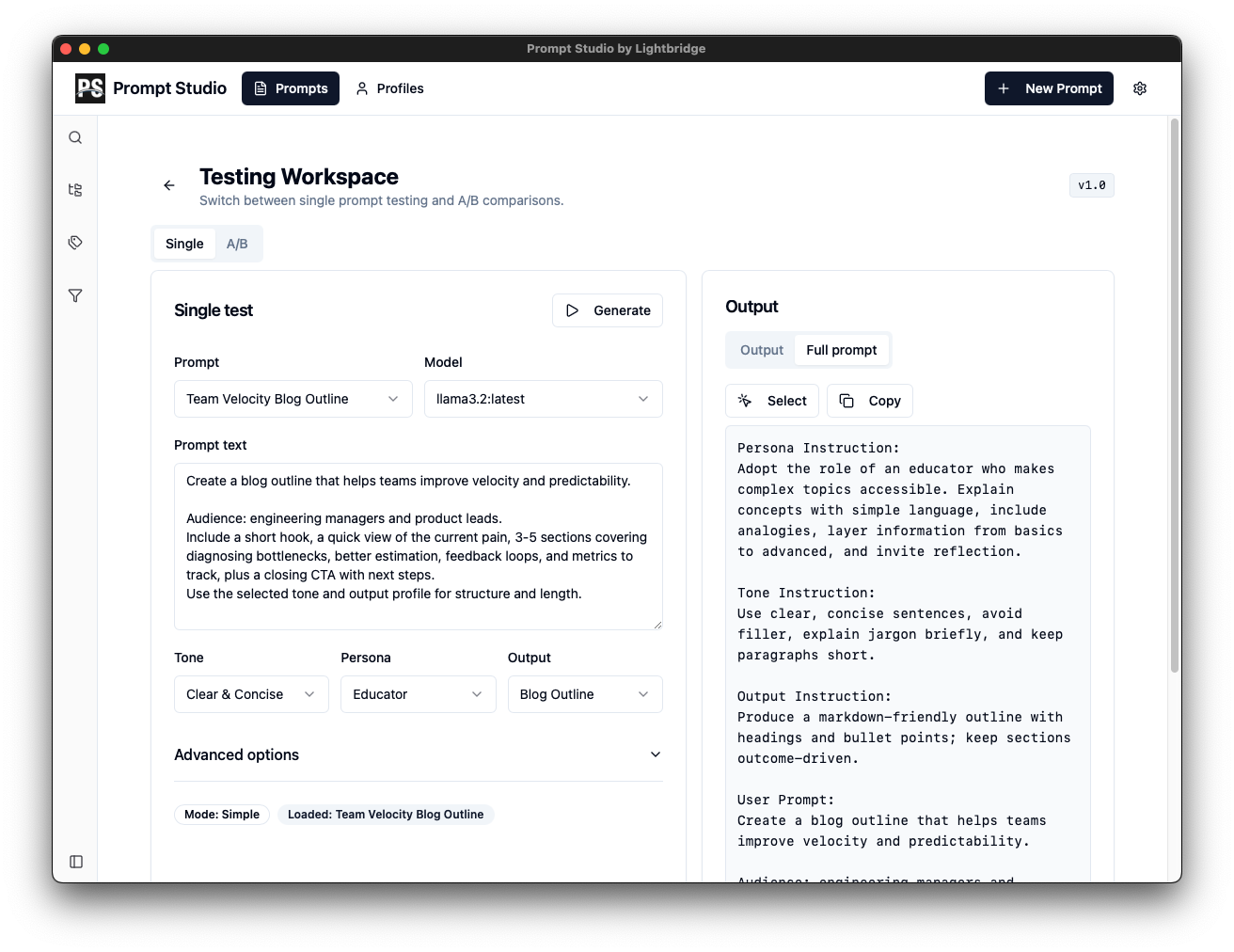

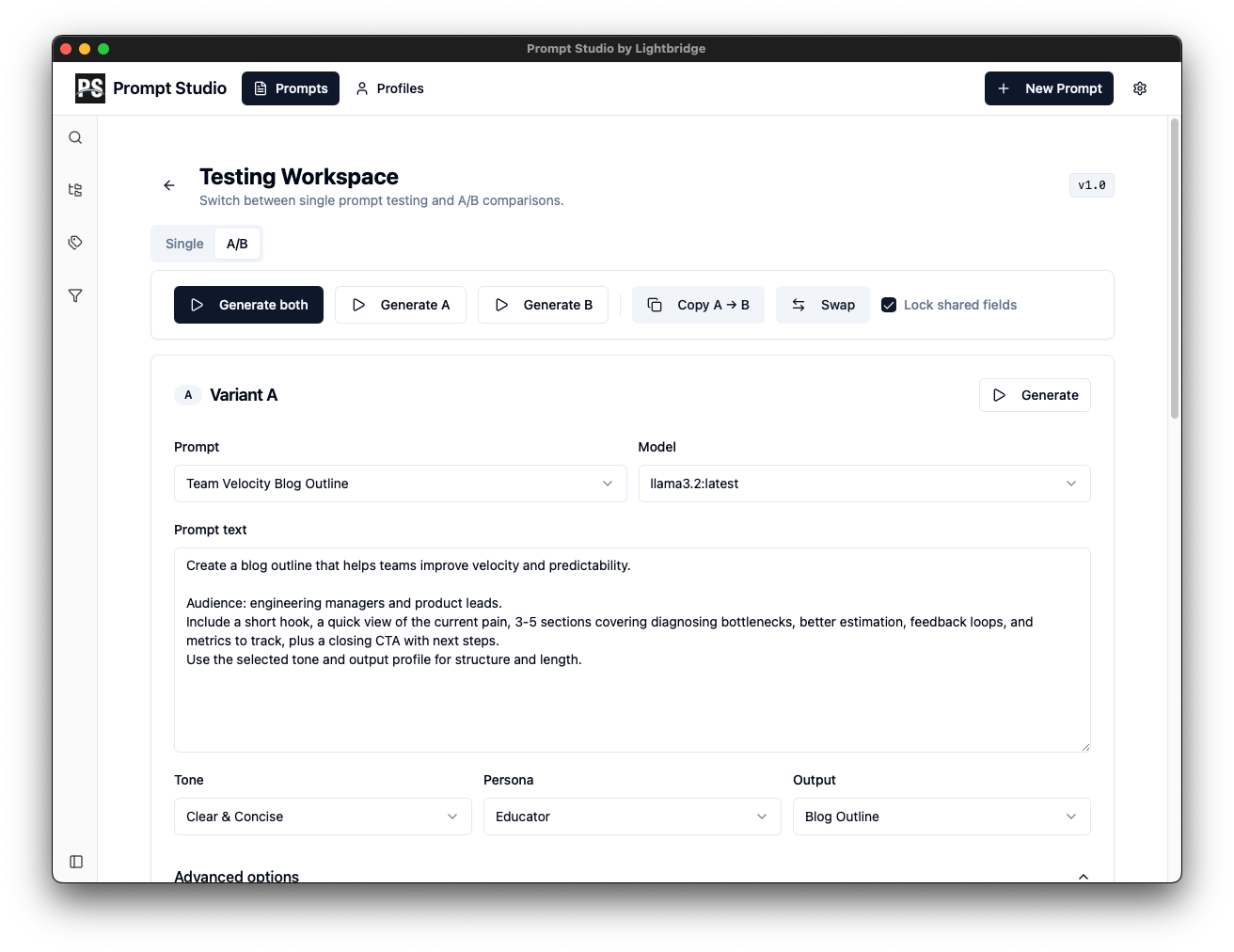

Prompt Testing Workspace

Test prompts against different scenarios and model configurations.

A/B Test Prompts

Compare prompt variants side-by-side to find what works best.

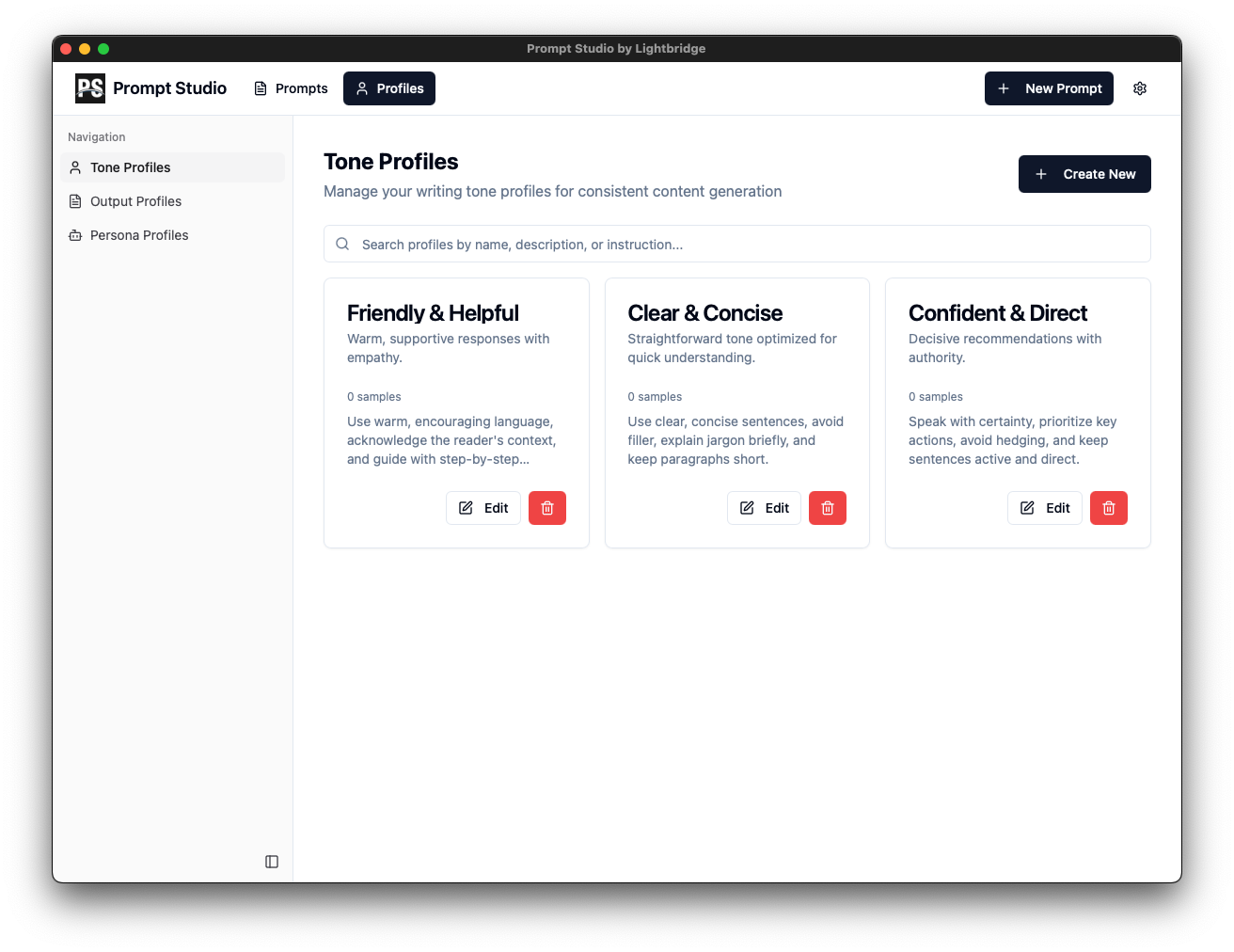

Tone Profiles

Turn writing samples into reusable tone instructions.

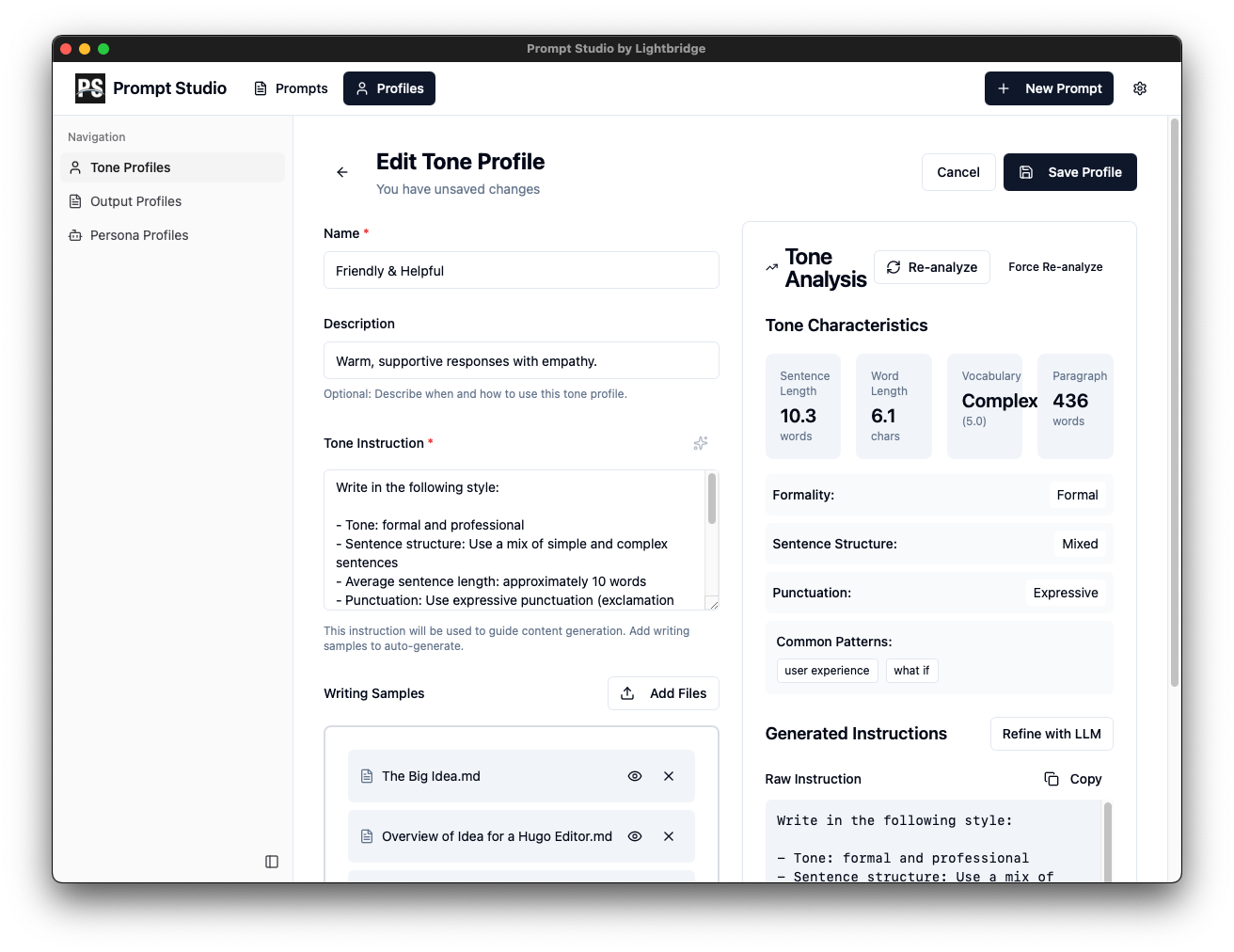

Edit Tone Profiles

Create and refine tone profiles from your writing samples.

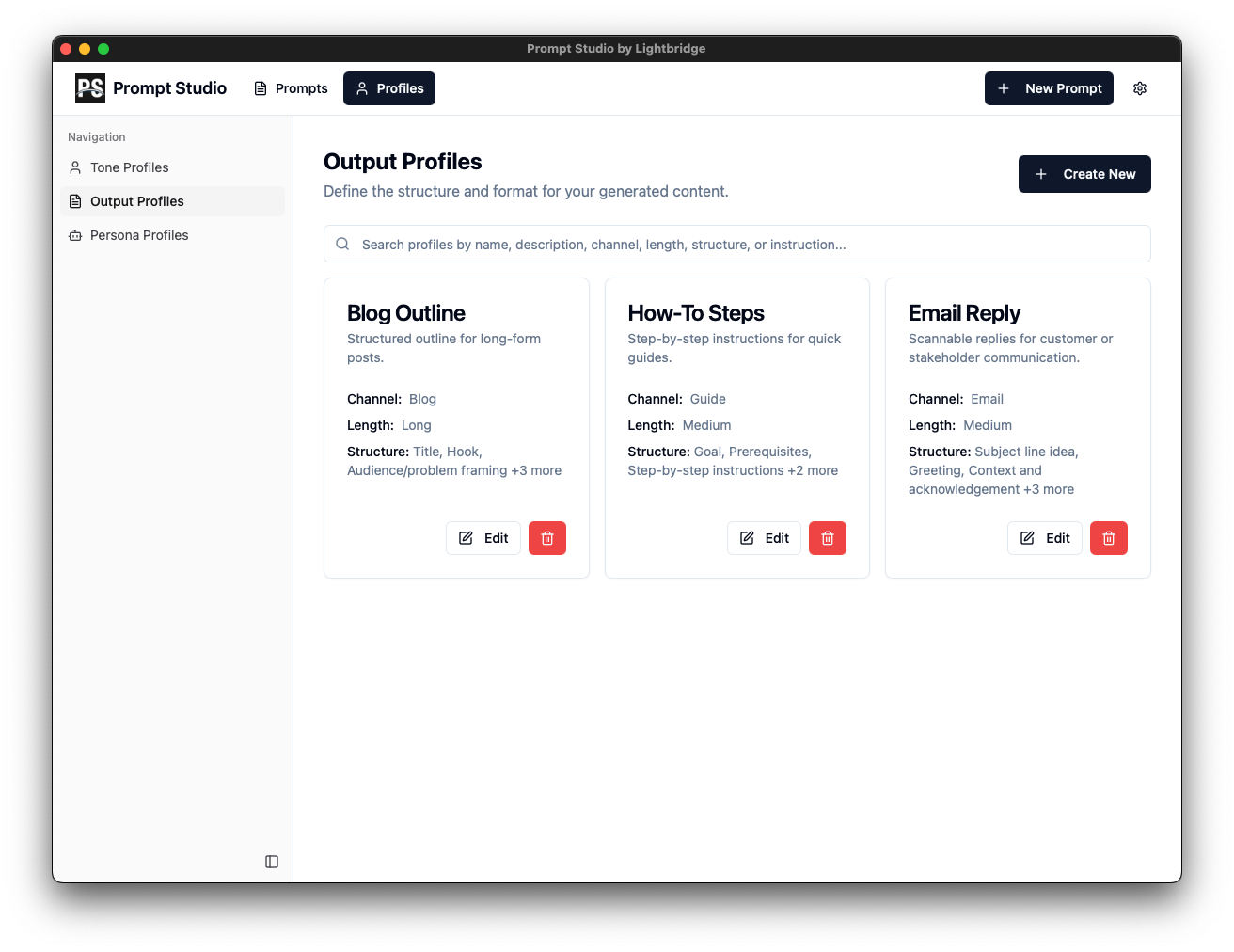

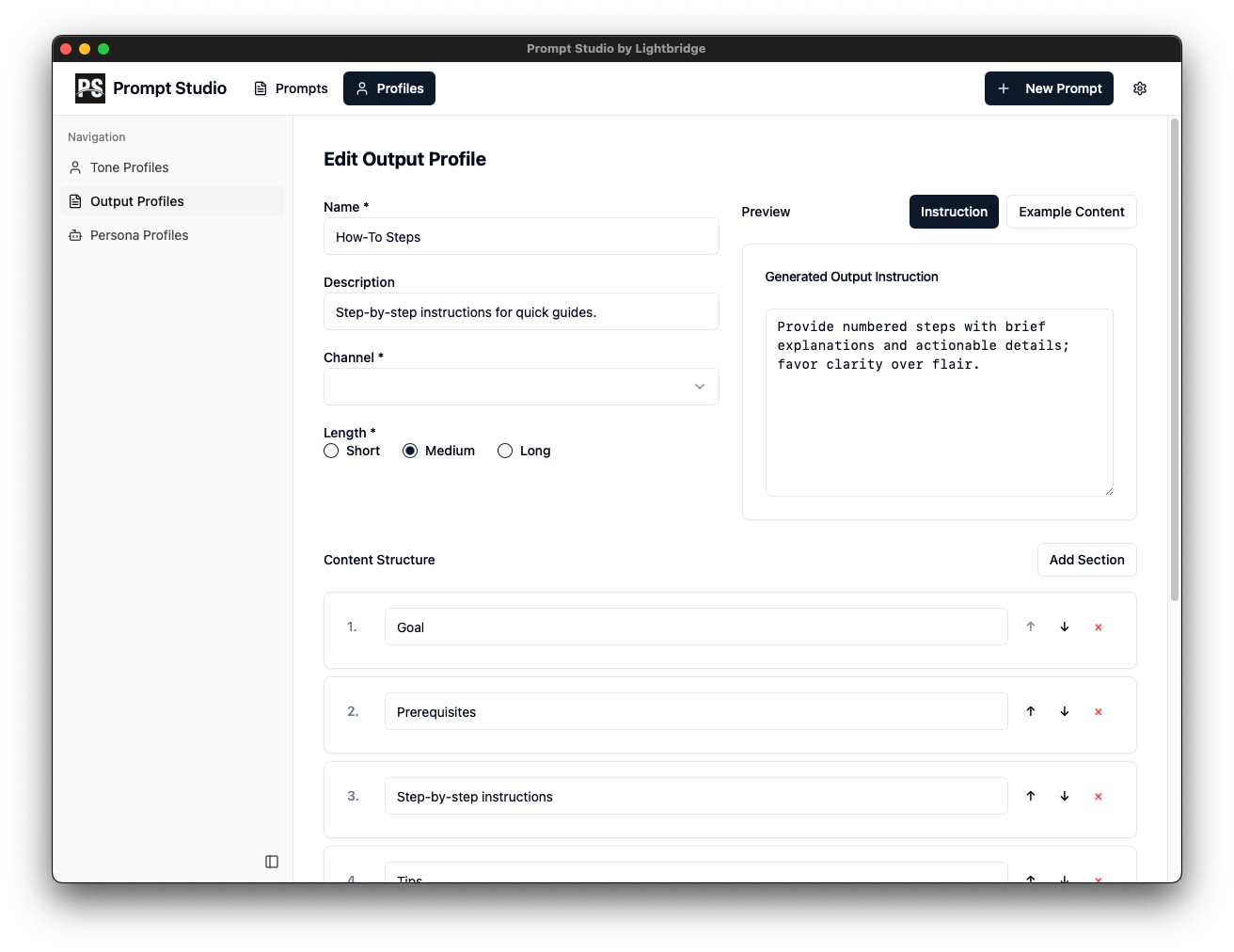

Output Profiles

Define structured output formats for consistent results.

Edit Output Profiles

Configure output structure and formatting preferences.

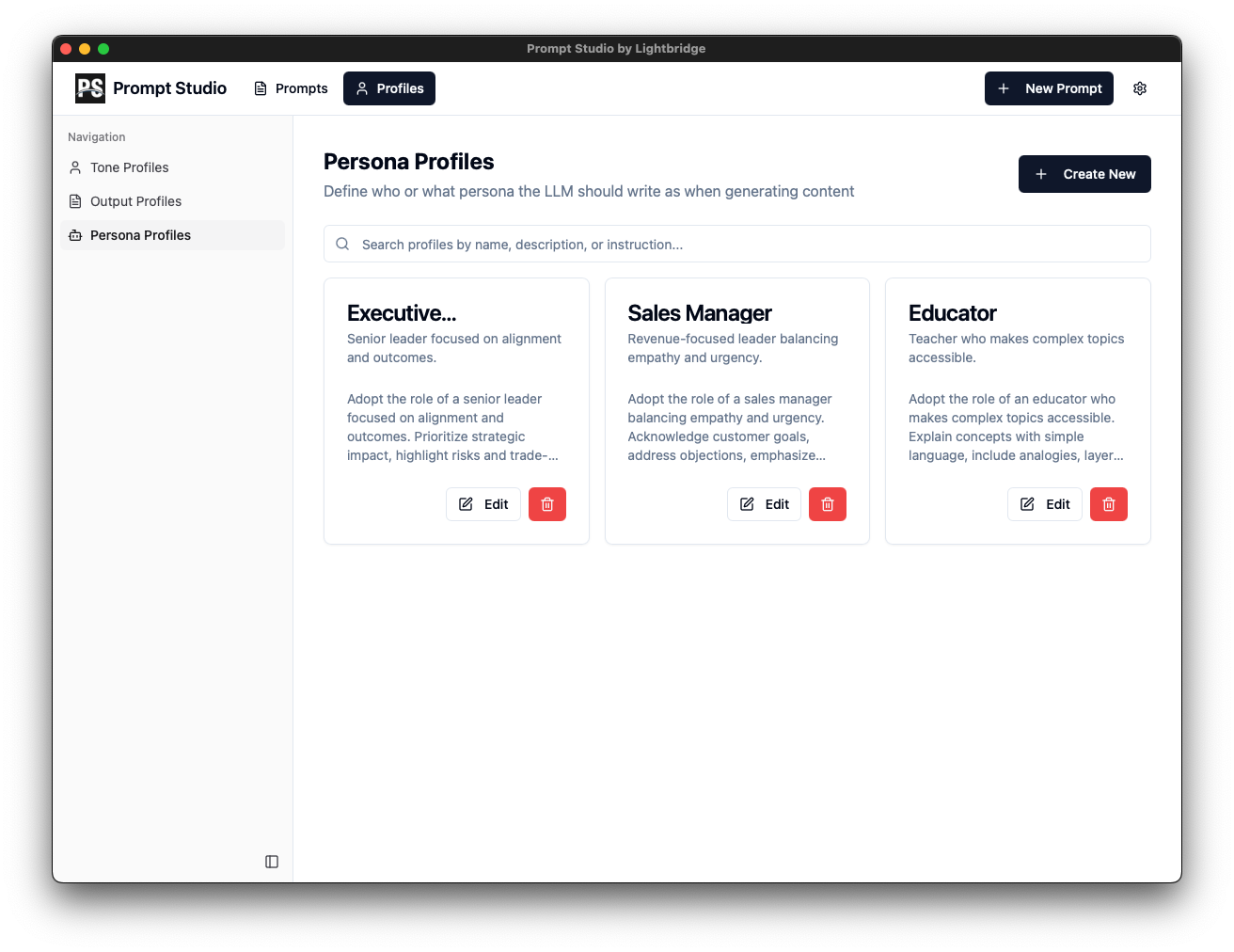

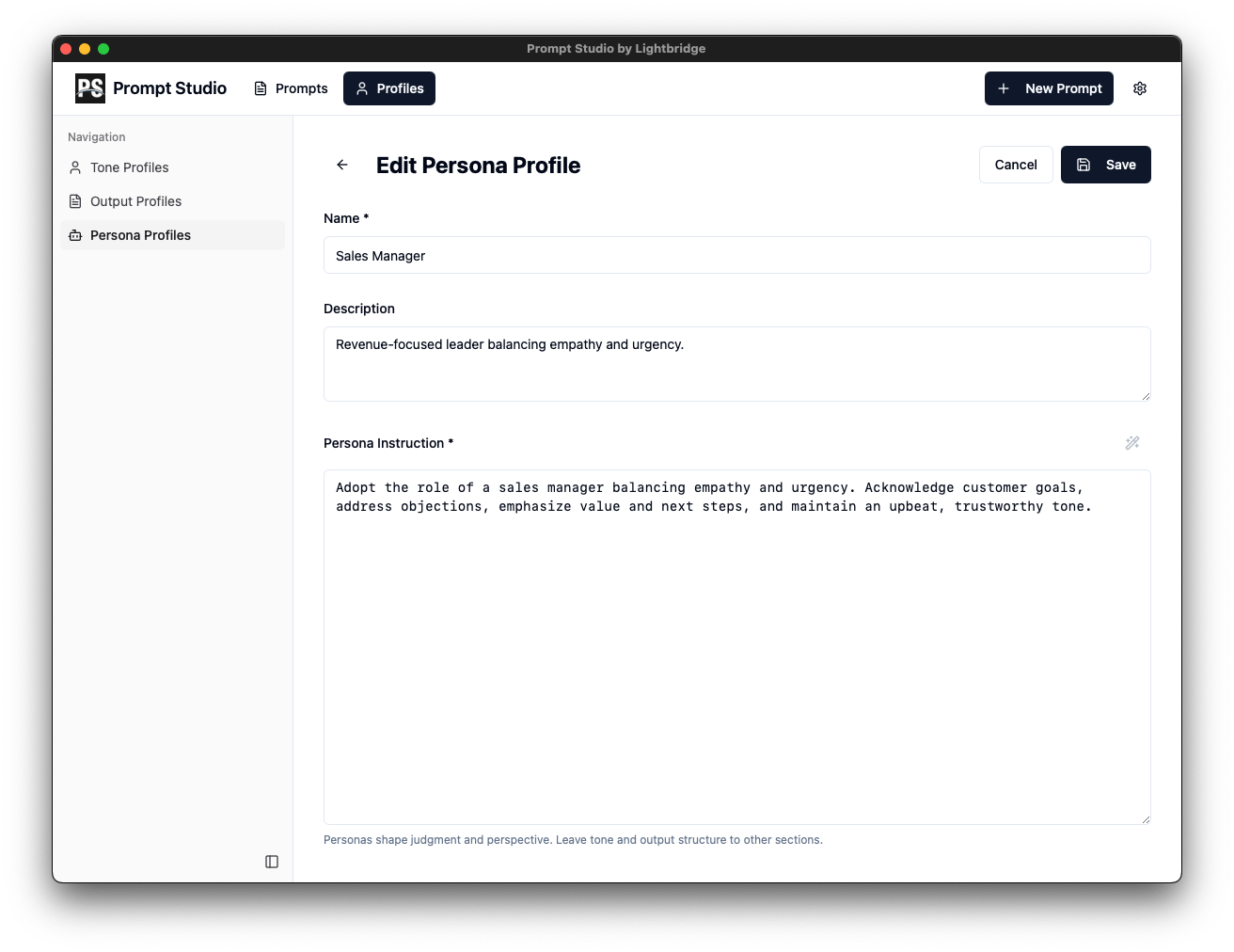

Persona Profiles

Create personas to guide AI responses for different contexts.

Edit Persona Profiles

Define personality traits and behavior for your AI personas.

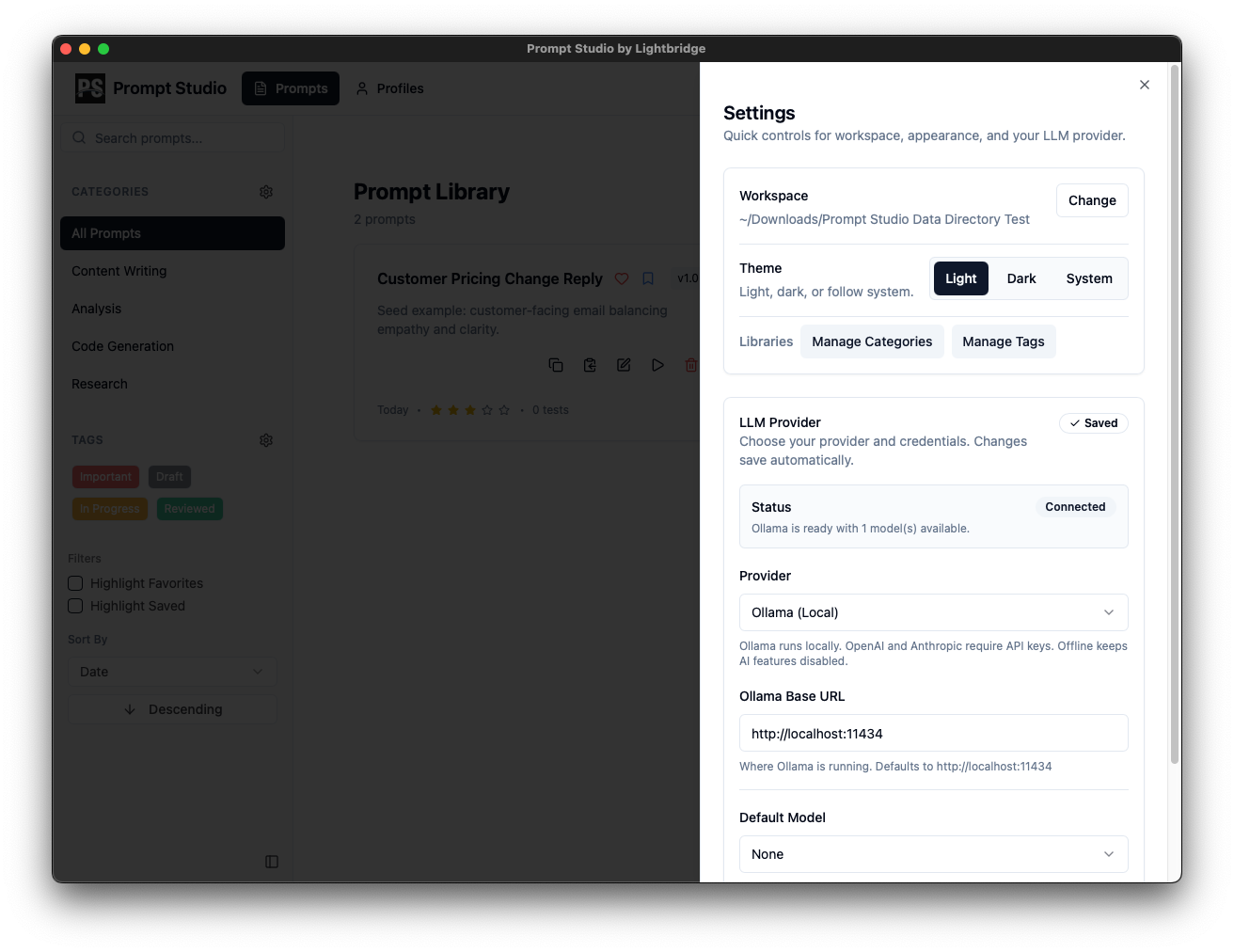

Settings

Configure preferences, model connections, and privacy controls.

Why now

Prompt work deserves the same rigor as code.

Teams keep experimenting in web chat UIs without auditability or privacy controls. Prompt Studio brings local-first guardrails so you can move fast and keep data safe.

Prompts live in the cloud

Sensitive context leaks into hosted tools and chat logs.

No repeatable tests

Results shift between runs without baselines or evals.

Scattered assets

Prompts, notes, and scripts end up across repos and docs.

What Prompt Studio does

A private control room for prompt design, testing, and shipping.

Keep your prompt lifecycle in one local tool. Capture context, version prompts, and run automated checks before anything leaves your machine.

Local-only workspace

Work entirely on-device with controllable model endpoints and zero third-party storage.

Prompt pipelines

Compose prompts, contexts, and guards as repeatable flows you can re-run or schedule.

Versioned artifacts

Commit prompts, tests, and datasets together so every release has an audit trail.

Evaluation baked in

Ship only when acceptance checks and red-team suites stay green.

Capabilities

Everything you need to keep prompts reliable.

From individual prompt sketching to production rollouts, Prompt Studio keeps the loop tight and private.

Tone Profiles from Samples

Turn writing samples into reusable tone instructions that capture your unique voice.

Versioned Prompt Library

Branch and compare versions while keeping the full history. Git-friendly and audit-ready.

Organize by category, tag, and rating

Everything is searchable by tag, category, and rating, so you can find what you need.

Secure by default

Runs fully local with explicit network toggles for any outbound model calls.

Evaluation suites

Red-team and regression checks with pass/fail gates before deploy.

Offline friendly

No cloud login required. Works even when you are disconnected.

Privacy first

Your prompts never leave your machine.

Prompt Studio ships as a locally installed app. Telemetry is off by default. If you opt in, only anonymous performance metrics are sent. No prompts, datasets, or identifiers ever leave the device.

Data ownership

You keep the keys

- No cloud sync unless you explicitly connect your own storage.

- Model credentials and datasets stay encrypted locally.

- Observability panels only show what you choose to log.

When telemetry is enabled, only anonymous performance data is sent: app version, OS, GPU/CPU presence, and crash dumps with sensitive strings stripped.

FAQ

Answers to common questions.

Still curious? Reach out and we will share the security note, deployment guide, or roadmap details you need.

Is Prompt Studio cloud dependent?

No. Everything runs locally by default. You can connect external endpoints you control, but nothing leaves your device without you enabling it.

Which models does it support?

Anything with an OpenAI-compatible API, plus local models via your own runtimes. Bring your keys or point to self-hosted gateways.
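For readers wondering what "OpenAI-compatible" means in practice, here is a minimal sketch of the request shape such an endpoint typically accepts. It is not taken from Prompt Studio itself. The gateway URL, API key, and model name are hypothetical placeholders, and the request is only built, never sent.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, prompt):
    """Build (but do not send) a POST request for an OpenAI-compatible chat endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hypothetical self-hosted gateway, key, and model name, for illustration only.
request = build_chat_request(
    "http://localhost:8000/v1",
    "sk-local-placeholder",
    "my-local-model",
    "Summarize this note.",
)
print(request.full_url)
```

Pointing the same request at a different gateway only changes the base URL and key, which is why one client shape can cover both hosted and self-hosted models.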

How do evaluations work?

Create suites of test prompts and acceptance criteria, then run them against prompt versions. Results gate releases and can be run in CI.
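As a rough illustration of the idea, an evaluation suite can be thought of as a list of test inputs paired with acceptance checks, plus a gate that passes only when every check does. The sketch below is an assumption about the general pattern, not Prompt Studio's actual format or API. `Case`, `run_suite`, and `fake_model` are hypothetical names, and `fake_model` stands in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Case:
    name: str                      # label shown when the case fails
    input_text: str                # test input fed to the prompt under test
    accept: Callable[[str], bool]  # acceptance criterion applied to the output


def run_suite(generate: Callable[[str], str], cases: List[Case]) -> Tuple[bool, List[str]]:
    """Run every case and return (all_passed, names_of_failed_cases)."""
    failures = [c.name for c in cases if not c.accept(generate(c.input_text))]
    return (not failures, failures)


def fake_model(text: str) -> str:
    # Stand-in for a prompt version run against a model (hypothetical).
    return f"Summary: {text[:40]}"


cases = [
    Case("has_prefix", "Quarterly revenue rose.", lambda out: out.startswith("Summary:")),
    Case("is_short", "x" * 500, lambda out: len(out) <= 60),
]

passed, failures = run_suite(fake_model, cases)
exit_code = 0 if passed else 1  # a CI job could exit with this code to gate a release
print(passed, failures)
```

Returning the failed case names alongside the boolean keeps the gate simple while still telling a reviewer which criterion broke.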

Can multiple people collaborate?

Yes. Projects are file-based and Git-friendly, so teams can branch, review, and merge prompt changes like code.

Is telemetry required?

Telemetry is off by default. If you opt in, only anonymous performance metrics are shared—never raw prompts or datasets.

Which platforms are supported?

macOS is available today, with Linux and Windows builds in early preview.

Get started

Bring your prompt workflow home.

Download Prompt Studio and keep your prompts, tests, and logs private from day one.

Apple Silicon • Windows & Linux coming soon